Deyu Zhou 周德宇

Email: dzhou861[at]connect.hkust-gz.edu.cn

I research interactive world models, driven by the lifelong goal of using AI for social good.

I'm always excited to discuss new ideas, especially the crazy ones. Don't hesitate to get in touch!

Ph.D. candidate @ HKUST-GZ, supervised by Prof. Harry SHUM and Prof. Lionel NI.

Selective Projects

Huawei · Face Blur Detection, Virtual Makeup (2019)

Tencent AI Lab · Emotion Classification, Neural Machine Translation (2020-2021)

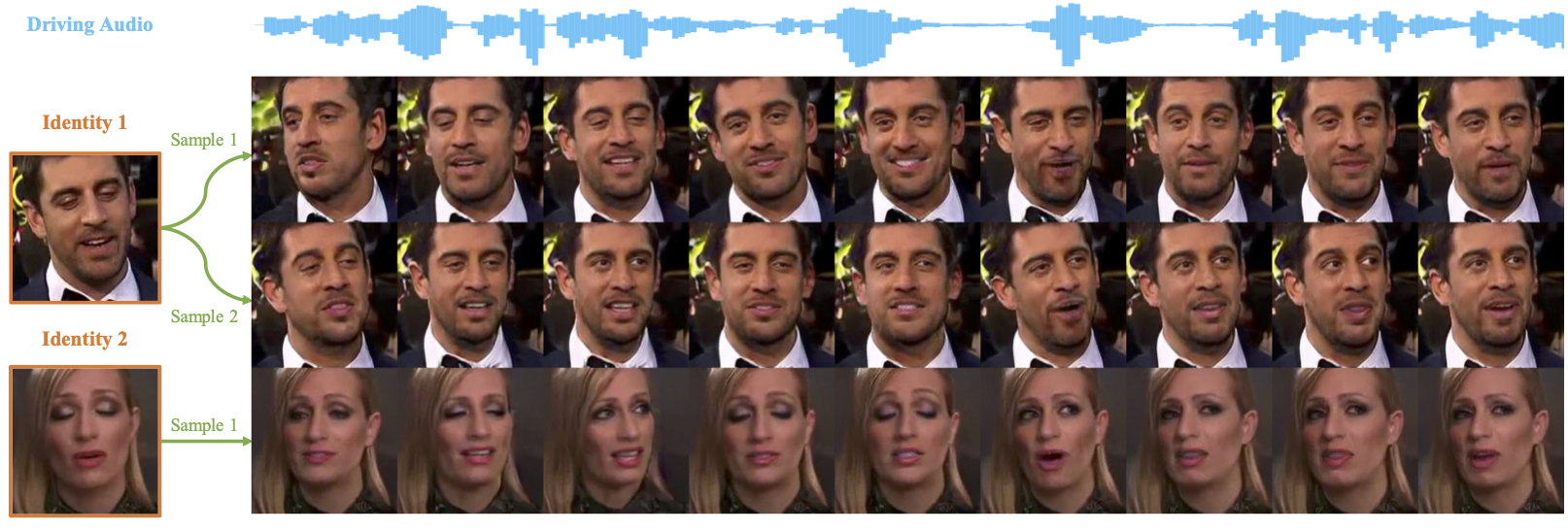

Xiaobing · Multimodal Conversation, Audio-driven Talking Head Generation (2021-2022)

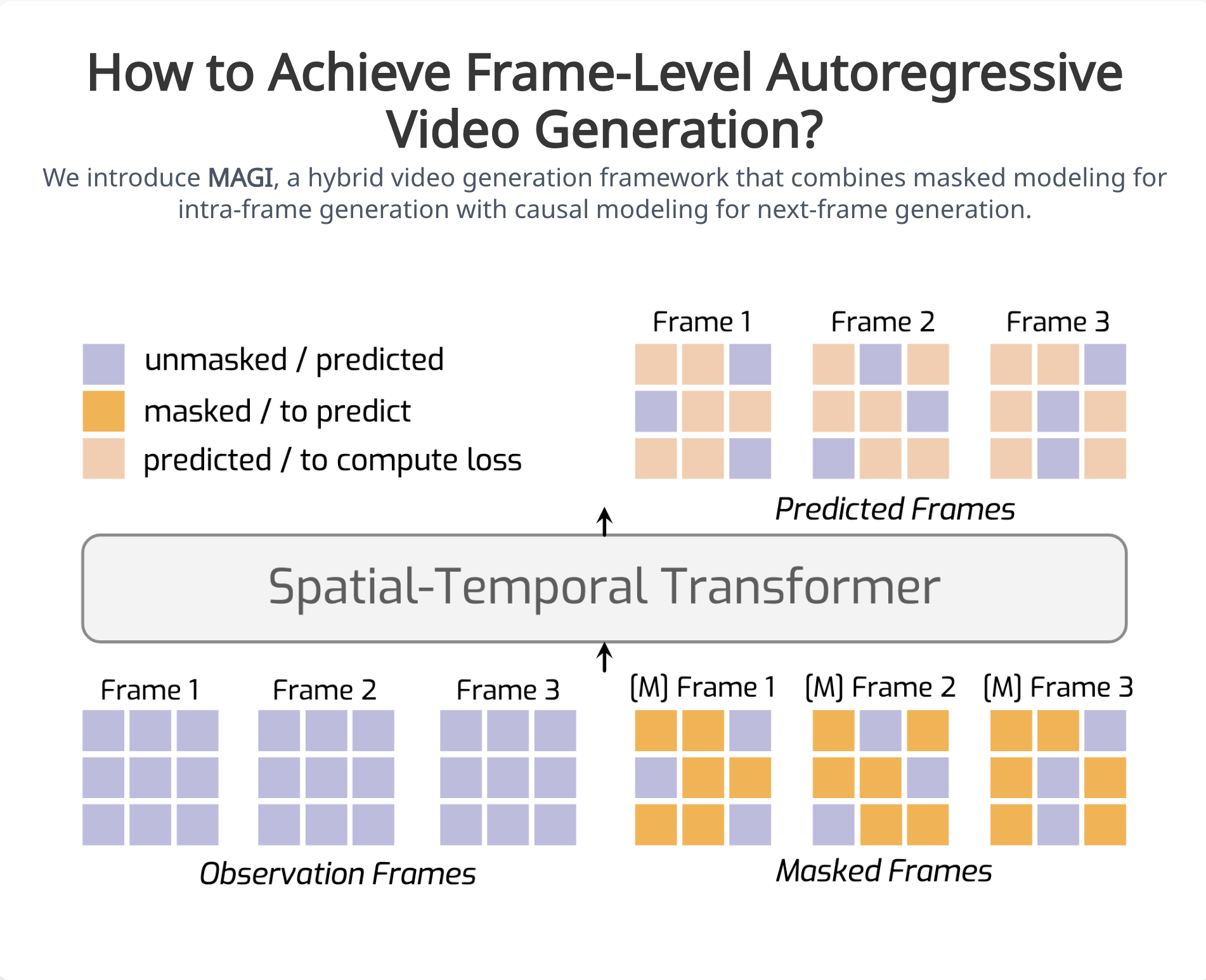

Step Fun · Text-to-Video Generation, Autoregressive Video Generation (2024)

Mentors & Collaborators

I have been very fortunate to work with and learn from Dr. Chen Dong, Dr. Shuangzhi Wu, Dr. Zhaopeng Tu, Dr. Baoyuan Wang, Dr. Duomin Wang, Dr. Yu Deng, Dr. Quan Sun, Dr. Zheng Ge, Dr. Nan Duan and Dr. Xiangyu Zhang.

Research Highlights

TH-PAD: Talking Head Generation with Probabilistic Audio-to-Visual Diffusion Priors

We introduce a novel framework for one-shot audio-driven talking head generation. Unlike prior works that require additional driving sources for controlled synthesis in a deterministic manner, we instead sample all holistic lip-irrelevant facial motions (i.e. pose, expression, blink, gaze, etc.) to semantically match the input audio while still maintaining both the photo-realism of audio-lip synchronization and overall naturalness.